torch allclose

torch cat

torch expand

torch from_numpy

torch gather

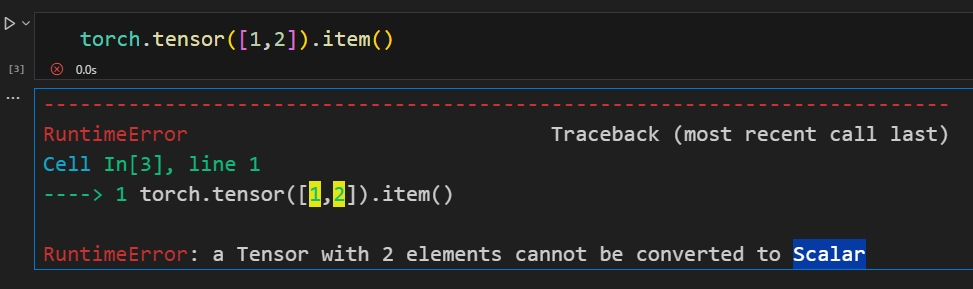

torch item

torch linspace

torch log

torch masked_select

torch masked_fill_

torch max

torch rand

torch round

torch.softmax

torch sort

torch squeeze

torch topk

torch view

torch tril

torch unsqueeze

torch zeros

torch random numbers

References

torch allclose

Both arguments must be tensors.

import torch

a = torch.tensor(1e-08, dtype=torch.float32)

b = torch.tensor(0, dtype=torch.float32)

torch.allclose(a, b)
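As a rough illustration of how the tolerances affect the result, the sketch below compares two tensors that differ by about 1e-3. The values are made up for the example; `rtol` and `atol` are the documented keyword arguments of `torch.allclose`, which checks `|input - other| <= atol + rtol * |other|` elementwise.

```python
import torch

a = torch.tensor([1.0, 2.0])
b = torch.tensor([1.0, 2.001])

# Default tolerances (rtol=1e-05, atol=1e-08) are too tight for a 1e-3 gap.
default_close = torch.allclose(a, b)

# Loosening the absolute tolerance lets the comparison pass.
loose_close = torch.allclose(a, b, atol=1e-2)

print(default_close, loose_close)
```

The return value is a plain Python bool, so it can be used directly in an `if` or `assert`.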

torch cat

import torch

a = torch.arange(6).reshape(2,3)

b = torch.arange(6).reshape(2,3)

torch.cat((a,b),dim=0)

tensor([[0, 1, 2],

[3, 4, 5],

[0, 1, 2],

[3, 4, 5]])
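For comparison, a small sketch of the same two tensors concatenated along each dimension. Only the size of the concatenated dimension changes; the other dimensions must match.

```python
import torch

a = torch.arange(6).reshape(2, 3)
b = torch.arange(6).reshape(2, 3)

# dim=0 stacks the rows: (2, 3) + (2, 3) -> (4, 3)
rows = torch.cat((a, b), dim=0)

# dim=1 places the tensors side by side: (2, 3) + (2, 3) -> (2, 6)
cols = torch.cat((a, b), dim=1)

print(rows.shape, cols.shape)
print(cols)
```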

torch expand

expand works on singleton dimensions (dimensions of size 1).

expand (/ɪkˈspænd/): v. to enlarge; to extend (a business); to increase or strengthen (in size, number or importance). Returns a new view of the self tensor with singleton dimensions expanded to a larger size. Passing -1 as the size for a dimension means not changing the size of that dimension. Tensor can be also expanded to a larger number of dimensions, and the new ones will be appended at the front. For the new dimensions, the size cannot be set to -1.

Expanding from one dimension to several dimensions

import torch

torch.manual_seed(73)

a = torch.rand(5)

a

tensor([0.5286, 0.1616, 0.8870, 0.6216, 0.0459])

a.expand(5,5)

tensor([[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459]])

a.expand(2,5,5)

tensor([[[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459]],

[[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459],

[0.5286, 0.1616, 0.8870, 0.6216, 0.0459]]])

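Expanding a multi-dimensional tensor to another multi-dimensional shape

The shape becomes multi-dimensional, but the underlying data is still a single vector.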

a = torch.rand(1,5)

a

tensor([[0.3856, 0.2258, 0.7837, 0.2052, 0.1868]])

b=a.expand(5,5)

b

tensor([[0.3856, 0.2258, 0.7837, 0.2052, 0.1868],

[0.3856, 0.2258, 0.7837, 0.2052, 0.1868],

[0.3856, 0.2258, 0.7837, 0.2052, 0.1868],

[0.3856, 0.2258, 0.7837, 0.2052, 0.1868],

[0.3856, 0.2258, 0.7837, 0.2052, 0.1868]])

a = torch.rand(1,1,1,5)

a

tensor([[[[0.9023, 0.9923, 0.4589, 0.7409, 0.4562]]]])

c = a.expand(-1,-1,5,5)

c

tensor([[[[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562]]]])

c = a.expand(1,1,5,5)

c

tensor([[[[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562]]]])

c = a.expand(-1,2,5,5)

c

tensor([[[[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562]],

[[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562]]]])

c = a.expand(1,2,5,5)

c

tensor([[[[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562]],

[[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562],

[0.9023, 0.9923, 0.4589, 0.7409, 0.4562]]]])
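Because expand returns a view, the expanded rows are not copies. The sketch below, using made-up values, checks this through the storage pointer and the strides; a stride of 0 means every row reads the same memory.

```python
import torch

a = torch.arange(5.0).reshape(1, 5)
b = a.expand(5, 5)

# The view points at the same memory as the original tensor.
same_storage = b.data_ptr() == a.data_ptr()

# Stride 0 along dim 0: stepping to the next row does not move in memory.
strides = b.stride()

# A write to the original is therefore visible in every expanded row.
a[0, 0] = 100.0
first_column = b[:, 0].tolist()

# clone() materializes an independent copy with real 5x5 storage.
independent = b.clone()

print(same_storage, strides, first_column)
```

If the expanded tensor needs to be modified in place, working on a copy such as `independent` is usually the safer choice.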

torch from_numpy

The NumPy array and the tensor share the same memory.

import numpy as np

import torch

a = np.array([0.1, 0.2, 0.3])

t = torch.from_numpy(a)

t[0] = 0.3

print(a)  # [0.3 0.2 0.3]

print(t.dtype)  # torch.float64

t = t.float()

print(t.dtype)  # torch.float32

NumPy floats default to float64, whereas torch floats default to float32.
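To contrast sharing with copying, here is a minimal sketch that builds one tensor with `torch.from_numpy` and one with `torch.tensor` from the same array, then modifies the array. The variable names are illustrative only.

```python
import numpy as np
import torch

a = np.array([0.1, 0.2, 0.3])

shared = torch.from_numpy(a)  # shares memory with the array
copied = torch.tensor(a)      # copies the data

# Modify the NumPy array after both tensors exist.
a[0] = 9.0

shared_first = shared[0].item()  # follows the change
copied_first = copied[0].item()  # keeps the old value

print(shared_first, copied_first)
```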

torch gather

gather (/ˈɡæðər/): v. to collect, to assemble

https://pytorch.org/docs/stable/generated/torch.gather.html

torch.gather(input, dim, index, *, sparse_grad=False, out=None) → Tensor

2-D

import torch

from torch import nn

t = torch.tensor([[1, 2], [3, 4]])

torch.gather(t, 1, torch.tensor([[0, 0], [1, 0]]))

tensor([[1, 1],

[4, 3]])

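With dim=1, the matrix is read row by row as two vectors, [1, 2] and [3, 4].

In [1, 2], index 0 holds 1, so the index row [0, 0] yields [1, 1].

In [3, 4], index 0 holds 3 and index 1 holds 4, so the index row [1, 0] yields [4, 3].

Note:

The index must have the same number of dimensions as the input. Its shape does not have to match exactly: along every dimension other than dim it may be smaller than the input, and the output always has the shape of the index.

For example, to pick the element in the first row and first column,

the index has to encode both the row and the column, so it must also be a 2-D tensor.

gather picks values by index along the given dimension and never drops that dimension, so the result has as many dimensions as the input.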

import torch

from torch import nn

t = torch.tensor([[1], [3]])

torch.gather(t, 1, torch.tensor([[0], [0]]))

tensor([[1],

[3]])

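The input is 2-D and its second dimension holds a single element.

The index, here with the same shape as the input, must pick one element along that dimension, and 0 is the only valid choice,

so the result of gather is necessarily identical to the input.

3-D

out[i][j][k] = input[index[i][j][k]][j][k]  # if dim == 0

out[i][j][k] = input[i][index[i][j][k]][k]  # if dim == 1

out[i][j][k] = input[i][j][index[i][j][k]]  # if dim == 2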
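The dim == 1 rule can be checked against an explicit loop. This is only a sketch with an arbitrary 2x3x4 input and a hand-picked index; note that the index here has size 1 along dim 1, and the output takes the shape of the index.

```python
import torch

inp = torch.arange(24).reshape(2, 3, 4)

# index has shape (2, 1, 4); each entry selects a position along dim=1.
index = torch.tensor([[[0, 1, 2, 0]],
                      [[2, 2, 1, 0]]])

out = torch.gather(inp, 1, index)

# Rebuild the result with the rule out[i][j][k] = input[i][index[i][j][k]][k].
expected = torch.empty_like(out)
for i in range(index.shape[0]):
    for j in range(index.shape[1]):
        for k in range(index.shape[2]):
            expected[i, j, k] = inp[i, index[i, j, k], k]

print(out)
print(torch.equal(out, expected))
```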

torch item

import torch

torch.tensor(1).item()

1

torch.tensor([1]).item()

1

torch linspace

Returns steps evenly spaced values over the closed interval [start, end], including both start and end.

import torch

torch.linspace(start=1, end=6, steps=6)

tensor([1., 2., 3., 4., 5., 6.])

import torch

a = torch.linspace(start=1, end=120, steps=120).reshape(30, 4)

a.shape  # torch.Size([30, 4])
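The spacing between neighbouring values is (end - start) / (steps - 1). The sketch below illustrates this with made-up bounds and contrasts it with `torch.arange`, which takes a step size and excludes the end point.

```python
import torch

# 5 points over [0, 1]: the spacing is (1 - 0) / (5 - 1) = 0.25.
x = torch.linspace(start=0, end=1, steps=5)

# arange takes the step directly and stops before the end value.
y = torch.arange(0, 1, 0.25)

print(x)
print(y)
```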

torch log

import torch

probs = torch.tensor([[0.9, 0.1], [0.1, 0.9], [0.8, 0.2]])

probs

tensor([[0.9000, 0.1000],

[0.1000, 0.9000],

[0.8000, 0.2000]])

torch.log(probs)

tensor([[-0.1054, -2.3026],

[-2.3026, -0.1054],

[-0.2231, -1.6094]])

torch.log(torch.tensor(2.71828)) # tensor(1.0000)

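$y_{i} = \log_{e}(x_{i})$

$\log_{e}$, also written $\ln$, is a monotonic function:

at $x = 1$ its value is 0,

at $x = e$ its value is 1.

Remembering these two special values, together with monotonicity, gives:

$\ln$ (and likewise $\log_{2}$) is an increasing function,

and for $0 < x < 1$ its value is negative.

Positive numbers split at 1 into pairs of reciprocals, written $x$ and $1/x$.

Let $a$ be the base of the logarithm ($a > 0$, $a \neq 1$) and let $b = 1/a$.

When $a > 1$, $\log_{a}(x)$ is an increasing function through the points $(1, 0)$ and $(a, 1)$.

$\log_{b}(x) = \frac{\ln x}{\ln b} = \frac{\ln x}{\ln(1/a)} = -\frac{\ln x}{\ln a} = \frac{\ln(1/x)}{\ln a} = \log_{a}(1/x)$

Correspondingly, $\log_{b}(x)$ is a decreasing function through the points $(b, 1)$ and $(1, 0)$.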
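The identity log_b(x) = log_a(1/x) with b = 1/a can be checked numerically. A small sketch, assuming a = 2 and a handful of sample points chosen for the example:

```python
import math
import torch

x = torch.tensor([0.25, 0.5, 1.0, 2.0, 4.0])
a = 2.0
b = 1.0 / a

# Change of base: log_a(x) = ln(x) / ln(a)
log_a = torch.log(x) / math.log(a)
log_b = torch.log(x) / math.log(b)
log_a_of_inverse = torch.log(1.0 / x) / math.log(a)

# Base a > 1 gives an increasing function, base b = 1/a a decreasing one.
increasing = bool((log_a[1:] > log_a[:-1]).all())
decreasing = bool((log_b[1:] < log_b[:-1]).all())

print(log_a)
print(log_b)
print(increasing, decreasing)
```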

torch masked_select

masked_select picks the elements at which the mask is True and returns them as a flat 1-D tensor.

import torch

from torch import nn

t = torch.tensor([[1, 2, 3, 4, 0], [4,7,3,0, 0]])

mask=torch.zeros(t.shape)

mask = t!=mask

mask

tensor([[ True, True, True, True, False],

[ True, True, True, False, False]])

t.masked_select(mask)

tensor([1, 2, 3, 4, 4, 7, 3])
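Boolean indexing gives the same result as masked_select. The sketch below reuses the tensor from above, treats 0 as padding (an assumption of this example), and also counts the non-padding entries per row.

```python
import torch

t = torch.tensor([[1, 2, 3, 4, 0], [4, 7, 3, 0, 0]])

# Compare against a scalar instead of building a zeros tensor first.
mask = t != 0

selected = t.masked_select(mask)
indexed = t[mask]            # equivalent boolean indexing

# Number of True entries in each row.
lengths = mask.sum(dim=1)

print(selected, lengths)
```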

torch masked_fill_

masked_fill_(mask, value)

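Replaces the elements at positions where mask is True with value.

In other words, the elements of the tensor selected by the mask (which has the same shape as the tensor, or can be broadcast to it) are overwritten in place with the given value. The mask must be a boolean tensor.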

import torch

torch.manual_seed(73)

mask = torch.randint(low=0,high=2,size=(1,7))

mask

tensor([[0, 0, 0, 0, 0, 0, 1]])

score = torch.randint(low=0,high=7,size=(1,7)).float()

score

tensor([[2., 1., 2., 0., 5., 5., 4.]])

score = score.masked_fill_(mask.bool(), -float('inf'))  # masked_fill_ requires a boolean mask

score

tensor([[2., 1., 2., 0., 5., 5., -inf]])

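In attention, masked_fill_ is used to mask out pad tokens: their scores are set to -inf, so that after softmax they receive an attention weight of 0, i.e. they do not count.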

import torch

torch.manual_seed(73)

batch_size=2

head_n1=4

seq_len1=5

seq_len2=5

score = torch.randn(batch_size,head_n1,5,5)

mask = torch.randint(low=0,high=2,size=(batch_size,1,1,5))

# In [5, 5], the second 5 is the 5 tokens of one sentence; the first 5 means that sentence is repeated 5 times

mask = mask.expand(batch_size,1,5,5)

mask

tensor([[[[0, 1, 0, 1, 1],

[0, 1, 0, 1, 1],

[0, 1, 0, 1, 1],

[0, 1, 0, 1, 1],

[0, 1, 0, 1, 1]]],

[[[0, 0, 0, 0, 0],

[0, 0, 0, 0, 0],

[0, 0, 0, 0, 0],

[0, 0, 0, 0, 0],

[0, 0, 0, 0, 0]]]])

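# Select the elements of score where mask is 1/True and fill them with negative infinity

# mask = [b, 1, 5, 5]; in [5, 5], the second 5 is the 5 tokens of one sentence, the first 5 is that sentence repeated 5 times

score = score.masked_fill_(mask.bool(), -float('inf'))  # masked_fill_ requires a boolean mask

# Apply softmax over the last dimension of score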

score = torch.softmax(score, dim=-1)

score.shape

torch.Size([2, 4, 5, 5])

score

tensor([[[[0.2033, 0.0000, 0.7967, 0.0000, 0.0000],

[0.7755, 0.0000, 0.2245, 0.0000, 0.0000],

[0.2173, 0.0000, 0.7827, 0.0000, 0.0000],

[0.6572, 0.0000, 0.3428, 0.0000, 0.0000],

[0.6494, 0.0000, 0.3506, 0.0000, 0.0000]],

...

...

...

[[0.2002, 0.0457, 0.0477, 0.6955, 0.0109],

[0.0463, 0.5003, 0.1162, 0.1059, 0.2313],

[0.0274, 0.3112, 0.0732, 0.2900, 0.2983],

[0.0923, 0.1678, 0.5336, 0.0851, 0.1212],

[0.0322, 0.6901, 0.0582, 0.1598, 0.0598]]]])
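A smaller, deterministic version of the same idea may be easier to follow. This sketch assumes a single sentence of 4 tokens whose last two positions are padding, and uses the out-of-place `masked_fill` so that the original scores stay untouched.

```python
import torch

# One batch, one head, 2 query positions, 4 key positions; all scores equal.
score = torch.zeros(1, 1, 2, 4)

# True marks padding. Shape (1, 1, 1, 4) broadcasts over heads and queries.
pad_mask = torch.tensor([False, False, True, True]).reshape(1, 1, 1, 4)

masked = score.masked_fill(pad_mask, float('-inf'))
weights = torch.softmax(masked, dim=-1)

print(weights)
```

Each row ends up spreading its weight evenly over the two real tokens and assigning exactly 0 to the padded ones.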

torch max

Maximum of the whole tensor, i.e. without specifying a dimension

import torch

a = torch.arange(6).reshape(2,3)

a

tensor([[0, 1, 2],

[3, 4, 5]])

torch.max(a)

tensor(5)

max along a given dimension

values, index = torch.max(a, dim=1)

print(values, index)

tensor([2, 5]) tensor([2, 2])

index: the position of each maximum within its vector along the given dimension
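A short sketch of the other common variants on the same 2x3 tensor: reducing over dim=0, keeping the reduced dimension with `keepdim=True`, and asking only for the positions with `torch.argmax`.

```python
import torch

a = torch.arange(6).reshape(2, 3)

# Reduce over rows: one maximum per column.
col_values, col_indices = torch.max(a, dim=0)

# keepdim=True keeps the reduced dimension with size 1.
kept = torch.max(a, dim=1, keepdim=True).values

# argmax returns only the indices.
arg = torch.argmax(a, dim=1)

print(col_values, col_indices)
print(kept.shape, arg)
```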

torch rand

torch.rand

torch.rand(30)

tensor([0.1914, 0.2577, 0.2453, 0.2744, 0.9262, 0.8579, 0.9360, 0.6833, 0.2155,

0.8981, 0.2958, 0.3697, 0.2429, 0.5146, 0.9817, 0.5375, 0.1799, 0.0588,

0.6656, 0.6645, 0.0427, 0.9996, 0.9549, 0.0636, 0.2233, 0.4129, 0.4928,

0.8897, 0.8169, 0.4618])

Returns a tensor filled with random numbers from a uniform distribution

on the interval [0, 1).

torch.randint(low, high, size=(m, n)) samples integers from [low, high); high itself is excluded. With low=0 and high=1, every element is therefore 0:

torch.randint(0, 1, (3,7))

tensor([[0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0],

[0, 0, 0, 0, 0, 0, 0]])
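To get a random 0/1 tensor, high has to be 2. A minimal sketch (the seed value is arbitrary):

```python
import torch

torch.manual_seed(0)

# high=2 is exclusive, so the possible values are 0 and 1.
bits = torch.randint(low=0, high=2, size=(3, 7))

# high=1 leaves 0 as the only possible value.
zeros_only = torch.randint(0, 1, (3, 7))

print(bits)
print(zeros_only)
```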

torch round

tensor.round(decimals=3)

import torch

a = torch.tensor(1.234535)

a.round(decimals=3)  # tensor(1.2350)

torch.softmax

When every element is -inf, torch.softmax does not turn them into 0; it returns nan. At least one finite value is required.

import torch

score = torch.randn(1,3)

mask = torch.tensor([1,1,1],dtype=torch.int) ==1

mask = mask.reshape(1,3)

mask

tensor([[True, True, True]])

score = score.masked_fill_(mask, -float('inf'))

score

tensor([[-inf, -inf, -inf]])

torch.softmax(score,dim=-1)

tensor([[nan, nan, nan]])
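One possible way to handle fully masked rows is to replace the resulting nan values with 0 afterwards. This is a sketch of that workaround using `torch.nan_to_num`, not the only option; the scores and mask are made up.

```python
import torch

score = torch.tensor([[1.0, 2.0, 3.0],
                      [0.5, 0.5, 0.5]])

# Row 0 is fully masked; row 1 has only its last position masked.
mask = torch.tensor([[True, True, True],
                     [False, False, True]])

masked = score.masked_fill(mask, float('-inf'))
weights = torch.softmax(masked, dim=-1)  # row 0 becomes nan

# Replace nan with 0 so the fully masked row contributes nothing.
safe = torch.nan_to_num(weights, nan=0.0)

print(weights)
print(safe)
```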

torch sort

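The example below uses Python's built-in list.sort(key=func), which sorts the list in place. torch.sort and Tensor.sort take no key argument; they return the sorted values together with their indices and leave the original tensor unchanged.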

import torch

pair_batch = [["一些 因 缘 发生 的 事 啊","像 梦幻 泡影 一样 难以捉摸"],

["如 朝露 与 闪电 一样 转瞬即逝","应该 这样 去 看"],

["它 一会 去 去 一会 又 来 来","有的 事 本就 如此 见多不怪"]

]

pair_batch.sort(key=lambda x: len(x[0].split(" ")), reverse=True)

pair_batch

[['它 一会 去 去 一会 又 来 来', '有的 事 本就 如此 见多不怪'],

['一些 因 缘 发生 的 事 啊', '像 梦幻 泡影 一样 难以捉摸'],

['如 朝露 与 闪电 一样 转瞬即逝', '应该 这样 去 看']]
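For tensors, the counterpart is `torch.sort` or `torch.argsort`. The sketch below sorts a small tensor and then sorts by length, assuming the token counts of the three sentence pairs above (7, 6 and 8) have already been put into a tensor.

```python
import torch

x = torch.tensor([3, 1, 2])

# sort returns a (values, indices) pair; x itself is not modified.
values, indices = torch.sort(x, descending=True)

# Token counts of the first sentence in each pair (assumed precomputed).
lengths = torch.tensor([7, 6, 8])

# argsort gives the order in which to visit the pairs, longest first.
order = torch.argsort(lengths, descending=True)

print(values, indices)
print(order)
```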

torch squeeze

squeeze (/skwiːz/): v. to press; to squeeze (something out of something); to cut back (funds); n. a squeeze; a reduction. torch.squeeze removes dimensions of size 1.

import torch

torch.manual_seed(73)

a = torch.rand(1, 2, 3)

a.shape  # torch.Size([1, 2, 3])

x = torch.squeeze(a)

x.shape  # torch.Size([2, 3])

a = torch.rand(2, 1, 3)

a.shape  # torch.Size([2, 1, 3])

x = torch.squeeze(a)

x.shape  # torch.Size([2, 3])

a = torch.rand(2, 3, 1)

a.shape  # torch.Size([2, 3, 1])

x = torch.squeeze(a)

x.shape  # torch.Size([2, 3])
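When only one specific dimension should be removed, `dim` can be passed. A small sketch with an arbitrary shape containing two singleton dimensions:

```python
import torch

a = torch.rand(1, 2, 1, 3)

# Without dim, every size-1 dimension is removed.
all_removed = torch.squeeze(a)

# With dim, only that dimension is removed, and only if its size is 1.
first_only = torch.squeeze(a, dim=0)

# dim=1 has size 2, so the shape is left unchanged.
unchanged = torch.squeeze(a, dim=1)

print(all_removed.shape, first_only.shape, unchanged.shape)
```

Passing `dim` explicitly can avoid accidentally dropping a batch dimension that happens to have size 1.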

torch topk

import torch

a=torch.tensor([[1,2,3],[1,3,2]],dtype=torch.float32)

max_values,index = a.topk(1)

max_values

tensor([[3.],

[3.]])

index

tensor([[2],

[1]])

torch.gather(a, dim=1, index=index)

tensor([[3.],

[3.]])
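The same tensor with k=2, plus the `largest=False` option for the smallest entries, as a brief sketch:

```python
import torch

a = torch.tensor([[1., 2., 3.], [1., 3., 2.]])

# Top 2 per row, returned in descending order with their positions.
values, indices = a.topk(2, dim=1)

# largest=False returns the k smallest entries instead.
min_values, min_indices = a.topk(1, largest=False)

print(values, indices)
print(min_values, min_indices)
```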

torch view

view(1, -1) yields a 2-D tensor: one dimension has size 1 and the other is inferred automatically.

import torch

a = torch.randn(2, 3)

a[0].shape  # torch.Size([3])

a[0].view(1, -1).shape  # torch.Size([1, 3])
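Like expand, view does not copy data. A minimal sketch showing the inferred dimension and the shared storage:

```python
import torch

a = torch.arange(6)

# -1 is inferred from the total number of elements: 6 / 2 = 3.
b = a.view(2, -1)

# The view shares storage with a, so a write through b changes a as well.
b[0, 0] = 100

print(b.shape, a)
```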

torch tril

import torch

torch.tril(torch.ones(1, 5, 5, dtype=torch.long))

tensor([[[1, 0, 0, 0, 0],

[1, 1, 0, 0, 0],

[1, 1, 1, 0, 0],

[1, 1, 1, 1, 0],

[1, 1, 1, 1, 1]]])

import torch

1-torch.tril(torch.ones(1, 5, 5, dtype=torch.long))

tensor([[[0, 1, 1, 1, 1],

[0, 0, 1, 1, 1],

[0, 0, 0, 1, 1],

[0, 0, 0, 0, 1],

[0, 0, 0, 0, 0]]])
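A typical use of the inverted lower-triangular matrix is a causal attention mask, where each position may only attend to itself and earlier positions. The sketch below assumes uniform scores and a sequence length of 4 to keep the result easy to read.

```python
import torch

seq_len = 4

# True above the diagonal marks the "future" positions to hide.
future = torch.tril(torch.ones(seq_len, seq_len)) == 0

# Uniform scores, so the softmax result depends only on the mask.
score = torch.zeros(seq_len, seq_len)
weights = torch.softmax(score.masked_fill(future, float('-inf')), dim=-1)

print(future)
print(weights)
```

Row i spreads its weight evenly over positions 0..i. The same mask can also be built with `torch.triu(..., diagonal=1)`.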

torch unsqueeze

import torch

torch.manual_seed(73)

a = torch.rand(2, 3)

a.shape  # torch.Size([2, 3])

x = torch.unsqueeze(a, dim=1)

x.shape  # torch.Size([2, 1, 3])

torch zeros

The most common zeros: 2-D

import torch

from torch import nn

a=torch.zeros((2,3), dtype=torch.long)

print(a,a.shape)

tensor([[0, 0, 0],

[0, 0, 0]]) torch.Size([2, 3])

a=torch.zeros(2,3, dtype=torch.long)

print(a,a.shape)

tensor([[0, 0, 0],

[0, 0, 0]]) torch.Size([2, 3])

Plain zeros: 1-D

a = torch.zeros([3], dtype=torch.long)

print(a, a.shape)

tensor([0, 0, 0]) torch.Size([3])

An unusual zeros: empty, a shape with no data

a = torch.zeros([0], dtype=torch.long)

print(a, a.shape)

tensor([], dtype=torch.int64) torch.Size([0])

b = torch.ones(1, 1, dtype=torch.long) * 1

b

tensor([[1]])

torch.cat((a, b), dim=0)

tensor([[1]])
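An empty tensor like this is handy as the starting value when results are collected in a loop. A sketch with hypothetical token ids (the list `[5, 9, 2]` is made up for the example):

```python
import torch

# Start with an empty 1-D tensor of the right dtype.
generated = torch.zeros(0, dtype=torch.long)

for token_id in [5, 9, 2]:  # hypothetical ids, e.g. produced one step at a time
    next_token = torch.tensor([token_id], dtype=torch.long)
    # Concatenating onto the empty tensor works on the first iteration too.
    generated = torch.cat((generated, next_token), dim=0)

print(generated, generated.shape)
```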

torch random numbers

torch.rand(*sizes, out=None) → Tensor

torch.randn(*sizes, out=None) → Tensor

torch.normal(mean, std, out=None) Note: this form has no size argument; the output shape follows the mean/std tensors

torch.normal(mean=torch.arange(1., 11.), std=torch.arange(1, 0, -0.1))

torch.normal(mean=0.5, std=torch.arange(1., 6.))

torch.normal(mean=torch.arange(1., 6.), std=1.0)

torch.normal(mean, std, size, *, out=None) Note: this form takes a size argument

torch.normal(2, 3, size=(1, 4))

import torch

round(torch.normal(mean=torch.tensor(5.), std=torch.tensor(3.)).tolist(),2)

7.35
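Because the draw above is unseeded, the printed number changes from run to run. As a sanity check, the sketch below draws many samples with the size form and compares the empirical mean and standard deviation with the requested ones; the seed and sample count are arbitrary choices.

```python
import torch

torch.manual_seed(0)

# Scalar mean and std together with an explicit size.
samples = torch.normal(mean=5.0, std=3.0, size=(100_000,))

empirical_mean = samples.mean().item()
empirical_std = samples.std().item()

print(round(empirical_mean, 2), round(empirical_std, 2))
```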

References