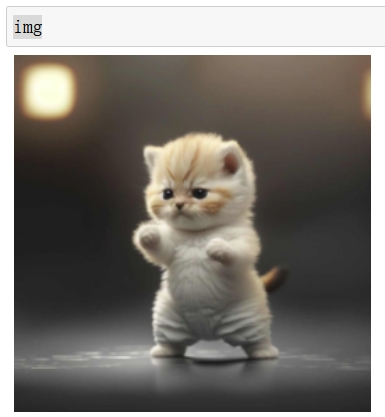

PIL Image

from PIL import Image

img = Image.open("lingmao.jpg")

type(img) #PIL.JpegImagePlugin.JpegImageFile

img.size #(940, 940)

transforms.Resize

import torch

from torchvision import transforms

img = transforms.Resize(size=(256, 256))(img)

import numpy as np

np.array(img).shape #(256, 256, 3)

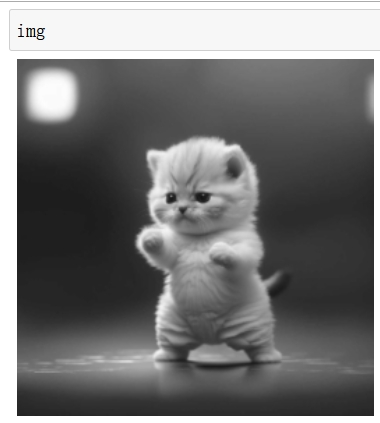

transforms.Grayscale

img = transforms.Grayscale()(img)

np.array(img)

array([[ 85, 86, 87, ..., 100, 100, 100],

[ 86, 87, 87, ..., 100, 100, 100],

[ 87, 87, 87, ..., 100, 100, 100],

...,

[ 38, 38, 38, ..., 40, 39, 38],

[ 36, 36, 36, ..., 39, 38, 38],

[ 36, 36, 36, ..., 38, 38, 38]], dtype=uint8)

transforms.ToTensor

Converts the image to a tensor and scales the values into the range [0, 1].

transforms.ToTensor()(img)

tensor([[[0.3333, 0.3373, 0.3412, ..., 0.3922, 0.3922, 0.3922],

[0.3373, 0.3412, 0.3412, ..., 0.3922, 0.3922, 0.3922],

[0.3412, 0.3412, 0.3412, ..., 0.3922, 0.3922, 0.3922],

...,

[0.1490, 0.1490, 0.1490, ..., 0.1569, 0.1529, 0.1490],

[0.1412, 0.1412, 0.1412, ..., 0.1529, 0.1490, 0.1490],

[0.1412, 0.1412, 0.1412, ..., 0.1490, 0.1490, 0.1490]]])

Each pixel value is simply divided by 255; the printed tensor shows the result rounded to 4 decimal places.

100/255 = 0.39215686274509803

38/255 = 0.14901960784313725

transforms.Normalize

img = transforms.ToTensor()(img)

type(img) # torch.Tensor

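Normalizes each channel with the given mean and standard deviation. Strictly speaking, this is a fixed per-channel standardization rather than batch normalization, because the statistics are supplied by the caller instead of being computed from a batch.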

#output[channel] = (input[channel] - mean[channel]) / std[channel]

transforms.Normalize(mean=[0.5], std=[0.5])(img)

tensor([[[-0.3333, -0.3255, -0.3176, ..., -0.2157, -0.2157, -0.2157],

[-0.3255, -0.3176, -0.3176, ..., -0.2157, -0.2157, -0.2157],

[-0.3176, -0.3176, -0.3176, ..., -0.2157, -0.2157, -0.2157],

...,

[-0.7020, -0.7020, -0.7020, ..., -0.6863, -0.6941, -0.7020],

[-0.7176, -0.7176, -0.7176, ..., -0.6941, -0.7020, -0.7020],

[-0.7176, -0.7176, -0.7176, ..., -0.7020, -0.7020, -0.7020]]])

References