VggNet

VERY DEEP CONVOLUTIONAL NETWORKS FOR LARGE -SCALE IMAGE RECOGNITION

arXiv:1409.1556v6 [cs.CV] 10 Apr 2015

Karen Simonyan∗ & Andrew Zisserman+

Visual Geometry Group, Department of Engineering Science, University of Oxford

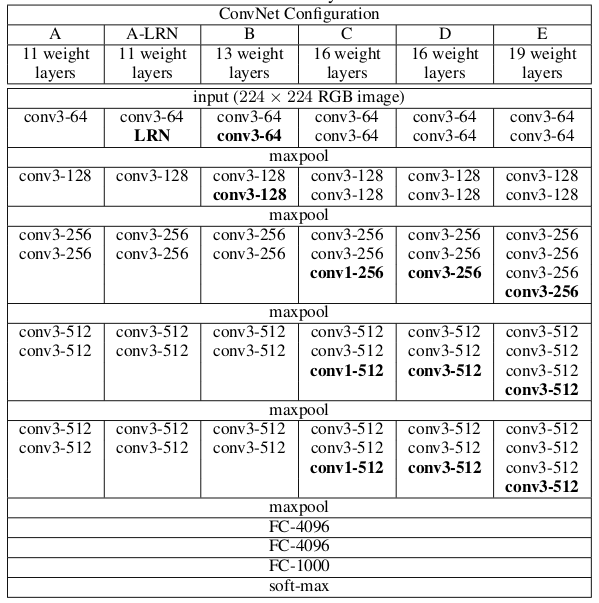

Table 1: ConvNet configurations (shown in columns).

The depth of the configurations increases from the left (A) to the right (E),

as more layers are added (the added layers are shown in bold).

The convolutional layer parameters are denoted as “convhreceptive field sizei-hnumber of channelsi”.

The ReLU activation function is not shown for brevity.

vgg16 D conv3-64:核为3,卷积将特征变换到64,或者说是从64个维度提取数据特征 conv3-64: maxpool:使用maxpool收缩特征图 conv3-128:核为3,卷积将特征变换到128,或者说是从128个维度提取数据特征 总体来说,VGG是用于大量图像识别的,从A到E参数变多,可以识别的图像量就越大

卷积的层数: 卷积与全连接算一层, maxpool,BN,RuLE等不算层,算组件 conv1-512与conv3-512 1与3表示卷积核为1与3: conv1-512:kernel_size=1,stripe=1,padding=0 conv3-512:kernel_size=3,stripe=1,padding=1 通道变换,in_channels,out_channels,控制特征的变换 卷积核控制滑动取窗,由kernel_size,stripe,padding三个参数控制 其他 LRN是类似于BN功能的一个组件,但没有发展起来 vgg16,19较常用 |

import torch

from torch import nn

class VggNet(nn.Module):

"""

自定义Vgg网络

"""

def __init__(self):

super(VggNet, self).__init__()

# 提取特征

self.features = nn.Sequential(

# stage1

nn.Conv2d(in_channels=3, out_channels=64, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=64),

nn.ReLU(),

nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=64),

nn.ReLU(),

# maxpool

nn.MaxPool2d(kernel_size=2, stride=2, padding=0),

# stage2

nn.Conv2d(in_channels=64, out_channels=128, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=128),

nn.ReLU(),

nn.Conv2d(in_channels=128, out_channels=128, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=128),

nn.ReLU(),

# maxpool

nn.MaxPool2d(kernel_size=2, stride=2, padding=0),

# stage3

nn.Conv2d(in_channels=128, out_channels=256, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=256),

nn.ReLU(),

nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=256),

nn.ReLU(),

nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=256),

nn.ReLU(),

nn.Conv2d(in_channels=256, out_channels=256, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=256),

nn.ReLU(),

# maxpool

nn.MaxPool2d(kernel_size=2, stride=2, padding=0),

# stage4

nn.Conv2d(in_channels=256, out_channels=512, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=512),

nn.ReLU(),

nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=512),

nn.ReLU(),

nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=512),

nn.ReLU(),

nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=512),

nn.ReLU(),

# maxpool

nn.MaxPool2d(kernel_size=2, stride=2, padding=0),

# stage5

nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=512),

nn.ReLU(),

nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=512),

nn.ReLU(),

nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=512),

nn.ReLU(),

nn.Conv2d(in_channels=512, out_channels=512, kernel_size=3, stride=1, padding=1),

nn.BatchNorm2d(num_features=512),

nn.ReLU(),

# maxpool

nn.MaxPool2d(kernel_size=2, stride=2, padding=0),

)

# 统一形状

self.avgpool = nn.AdaptiveAvgPool2d(output_size=(7, 7))

# 做分类

self.classifier = nn.Sequential(

nn.Dropout(p=0.5),

nn.Flatten(),

nn.Linear(in_features=25088, out_features=4096),

nn.ReLU(),

nn.Dropout(p=0.5),

nn.Linear(in_features=4096, out_features=4096),

nn.ReLU(),

nn.Dropout(p=0.5),

nn.Linear(in_features=4096, out_features=1000)

)

def forward(self, x):

x = self.features(x)

x = self.avgpool(x)

o = self.classifier(x)

return o

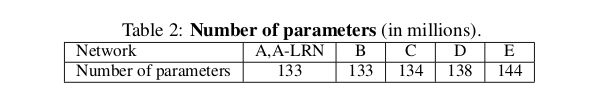

imgs = torch.randn(3,3,224,224) model = VggNet() model(imgs).shape 参数量 sum(x.numel() for x in model.parameters()) 143678248 |

|

主体流程 卷积提取特征:卷啊卷,激活,池化 # 统一形状 self.avgpool = nn.AdaptiveAvgPool2d(output_size=(7, 7)) 全连接分类 Dropout 因为信息量大,dropout起到弱化过拟合的作用 信息量 VGG给出一种提示/暗示/方式,处理数据的信息量大,那么对应的网络层数也多 结构顺序 同时,VGG进一步遵从了 卷积 -- BN - RELU -- MAXPOOL 这种结构顺序 但个人(仅个人观点),认为 卷积 -- MAXPOOL - RELU - BN 更符合各层的含义 |

|

|

|

|

参考